Topic 1: Litware, Inc.

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam

time as you would like to complete each case. However, there may be additional case

studies and sections on this exam. You must manage your time to ensure that you are able

to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information

that is provided in the case study. Case studies might contain exhibits and other resources

that provide more information about the scenario that is described in the case study. Each

question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review

your answers and to make changes before you move to the next section of the exam. After

you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the

left pane to explore the content of the case study before you answer the questions. Clicking

these buttons displays information such as business requirements, existing environment,

and problem statements. If the case study has an All Information tab, note that the

information displayed is identical to the information displayed on the subsequent tabs.

When you are ready to answer a question, click the Question button to return to the

question.

Overview

Litware, Inc. is a United States-based grocery retailer. Litware has a main office and a

primary datacenter in Seattle. The company has 50 retail stores across the United States

and an emerging online presence. Each store connects directly to the internet.

Existing environment. Cloud and Data Service Environments.

Litware has an Azure subscription that contains the resources shown in the following table.

Each container in productdb is configured for manual throughput.

The con-product container stores the company's product catalog data. Each document in

con-product includes a con-productVendor value. Most queries targeting the data in con-product

are in the following format.

SELECT * FROM con-product p WHERE p.con-productVendor = 'name'

Most queries targeting the data in the con-productVendor container are in the following

format.

SELECT * FROM con-productVendor pv

ORDER BY pv.creditRating, pv.yearFounded
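An ORDER BY over two properties, as in the query above, is served only when the container has a matching composite index. A minimal sketch of such an indexing policy, written as a Python dictionary in the JSON shape that Azure Cosmos DB uses. The wildcard included path and the ascending sort order are assumptions, not details from the case study.

```python
import json

# Hypothetical indexing policy for the con-productVendor container.
# The composite index lists the ORDER BY properties in the same sequence
# and sort order as the query (ascending is the ORDER BY default).
vendor_indexing_policy = {
    "indexingMode": "consistent",
    "includedPaths": [{"path": "/*"}],
    "compositeIndexes": [
        [
            {"path": "/creditRating", "order": "ascending"},
            {"path": "/yearFounded", "order": "ascending"},
        ]
    ],
}

print(json.dumps(vendor_indexing_policy, indent=2))
```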

Existing environment. Current Problems.

Litware identifies the following issues:

Updates to product categories in the con-productVendor container do not propagate

automatically to documents in the con-product container.

Application updates in con-product frequently cause HTTP status code 429 "Too many

requests". You discover that the 429 status code relates to excessive request unit (RU)

consumption during the updates.

Requirements. Planned Changes

Litware plans to implement a new Azure Cosmos DB Core (SQL) API account named

account2 that will contain a database named iotdb. The iotdb database will contain two

containers named con-iot1 and con-iot2.

Litware plans to make the following changes:

Store the telemetry data in account2.

Configure account1 to support multiple read-write regions.

Implement referential integrity for the con-product container.

Use Azure Functions to send notifications about product updates to different recipients.

Develop an app named App1 that will run from all locations and query the data in account1.

Develop an app named App2 that will run from the retail stores and query the data in

account2. App2 must be limited to a single DNS endpoint when accessing account2.

Requirements. Business Requirements

Litware identifies the following business requirements:

Whenever there are multiple solutions for a requirement, select the solution that provides

the best performance, as long as there are no additional costs associated.

Ensure that Azure Cosmos DB costs for IoT-related processing are predictable.

Minimize the number of firewall changes in the retail stores.

Requirements. Product Catalog Requirements

Litware identifies the following requirements for the product catalog:

Implement a custom conflict resolution policy for the product catalog data.

Minimize the frequency of errors during updates of the con-product container.

Once multi-region writes are configured, maximize the performance of App1 queries

against the data in account1.

Trigger the execution of two Azure functions following every update to any document in the

con-product container.

You need to identify which connectivity mode to use when implementing App2. The

solution must support the planned changes and meet the business requirements.

Which connectivity mode should you identify?

A. Direct mode over HTTPS

B. Gateway mode (using HTTPS)

C. Direct mode over TCP

Answer: C. Direct mode over TCP

Explanation:

Scenario: Develop an app named App2 that will run from the retail stores and query the

data in account2. App2 must be limited to a single DNS endpoint when accessing

account2.

By using Azure Private Link, you can connect to an Azure Cosmos account via a private

endpoint. The private endpoint is a set of private IP addresses in a subnet within your

virtual network.

When you're using Private Link with an Azure Cosmos account through a direct mode

connection, you can use only the TCP protocol. The HTTP protocol is not currently

supported.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-configure-private-endpoints

You are troubleshooting the current issues caused by the application updates.

Which action can address the application updates issue without affecting the functionality

of the application?

A. Enable time to live for the con-product container.

B. Set the default consistency level of account1 to strong.

C. Set the default consistency level of account1 to bounded staleness.

D. Add a custom indexing policy to the con-product container.

Answer: C. Set the default consistency level of account1 to bounded staleness.

Explanation:

Bounded staleness is frequently chosen by globally distributed applications that expect low

write latencies but require total global order guarantee. Bounded staleness is great for

applications featuring group collaboration and sharing, stock tickers, publish-subscribe/queueing, etc.

Scenario: Application updates in con-product frequently cause HTTP status code 429 "Too

many requests". You discover that the 429 status code relates to excessive request unit

(RU) consumption during the updates.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels
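Independently of the server-side change, clients usually absorb occasional 429 responses by waiting and then retrying. A minimal sketch of that pattern using only the standard library. `ThrottledError`, `retry_on_throttle`, and the fake `flaky_update` operation are hypothetical stand-ins, not Azure SDK names; the Azure Cosmos DB SDKs ship their own retry handling for throttled requests.

```python
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for an HTTP 429 response."""

    def __init__(self, retry_after_s):
        super().__init__("429 Too many requests")
        self.retry_after_s = retry_after_s


def retry_on_throttle(operation, max_attempts=5, sleep=time.sleep):
    """Run operation(), waiting and retrying while it is throttled."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ThrottledError as err:
            if attempt == max_attempts:
                raise  # give up after the last allowed attempt
            sleep(err.retry_after_s)  # honor the server-suggested delay


# Fake update that is throttled twice before it succeeds.
calls = {"count": 0}


def flaky_update():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise ThrottledError(retry_after_s=0.01)
    return "updated"


result = retry_on_throttle(flaky_update)
print(result, "after", calls["count"], "attempts")
```

Retrying hides the symptom only; lowering the RU cost of each update is still what reduces how often throttling occurs.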

You need to select the partition key for con-iot1. The solution must meet the IoT telemetry

requirements.

What should you select?

A. the timestamp

B. the humidity

C. the temperature

D. the device ID

Answer: D. the device ID

Explanation:

The partition key is what will determine how data is routed in the various partitions by

Cosmos DB and needs to make sense in the context of your specific scenario. The IoT

Device ID is generally the "natural" partition key for IoT applications.

Scenario: The iotdb database will contain two containers named con-iot1 and con-iot2.

Ensure that Azure Cosmos DB costs for IoT-related processing are predictable.

Reference:

https://docs.microsoft.com/en-us/azure/architecture/solution-ideas/articles/iot-using-cosmos-db
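The routing described in the explanation can be illustrated with a small simulation. This is a sketch under stated assumptions: the SHA-256 hash, the partition count of 4, and the sample readings are all hypothetical, and Azure Cosmos DB uses its own internal hashing. It only shows that every reading from one device lands in the same partition while different devices can spread across partitions.

```python
import hashlib
from collections import defaultdict

PARTITION_COUNT = 4  # hypothetical number of physical partitions


def partition_for(key_value):
    """Map a partition key value to a partition via a stable hash."""
    digest = hashlib.sha256(str(key_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % PARTITION_COUNT


# Hypothetical telemetry readings from three devices.
readings = [
    {"deviceId": f"device-{n % 3}", "temperature": 20 + n, "timestamp": 1000 + n}
    for n in range(9)
]

# Group the readings by the partition their device ID hashes to.
by_partition = defaultdict(list)
for doc in readings:
    by_partition[partition_for(doc["deviceId"])].append(doc)

for partition, docs in sorted(by_partition.items()):
    print(partition, sorted({d["deviceId"] for d in docs}))
```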

You configure multi-region writes for account1.

You need to ensure that App1 supports the new configuration for account1. The solution

must meet the business requirements and the product catalog requirements.

What should you do?

A. Set the default consistency level of account1 to bounded staleness.

B. Create a private endpoint connection.

C. Modify the connection policy of App1.

D. Increase the number of request units per second (RU/s) allocated to the con-product and con-productVendor containers.

Answer: D. Increase the number of request units per second (RU/s) allocated to the con-product and con-productVendor containers.

Explanation:

App1 queries the con-product and con-productVendor containers.

Note: Request unit is a performance currency abstracting the system resources such as

CPU, IOPS, and memory that are required to perform the database operations supported

by Azure Cosmos DB.

Scenario:

Develop an app named App1 that will run from all locations and query the data in account1.

Once multi-region writes are configured, maximize the performance of App1 queries

against the data in account1.

Whenever there are multiple solutions for a requirement, select the solution that provides

the best performance, as long as there are no additional costs associated.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

You need to provide a solution for the Azure Functions notifications following updates to

con-product. The solution must meet the business requirements and the product catalog

requirements.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Configure the trigger for each function to use a different leaseCollectionPrefix

B. Configure the trigger for each function to use the same leaseCollectionName

C. Configure the trigger for each function to use a different leaseCollectionName

D. Configure the trigger for each function to use the same leaseCollectionPrefix

Answer:

A. Configure the trigger for each function to use a different leaseCollectionPrefix

B. Configure the trigger for each function to use the same leaseCollectionName

Explanation:

leaseCollectionPrefix: when set, the value is added as a prefix to the leases created in the

Lease collection for this Function. Using a prefix allows two separate Azure Functions to

share the same Lease collection by using different prefixes.

Scenario: Use Azure Functions to send notifications about product updates to different

recipients.

Trigger the execution of two Azure functions following every update to any document in the

con-product container.

Reference:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger
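The shared lease collection with distinct prefixes can be sketched as two function.json-style trigger bindings, built here as Python dictionaries. The function names, the `CosmosConnection` app setting, and the `leases` collection name are hypothetical; the database and container names are taken from the case study.

```python
import json


def cosmos_trigger_binding(name, lease_prefix):
    """Build a function.json-style Cosmos DB trigger binding."""
    return {
        "type": "cosmosDBTrigger",
        "name": name,
        "direction": "in",
        "connectionStringSetting": "CosmosConnection",  # hypothetical app setting
        "databaseName": "productdb",
        "collectionName": "con-product",
        "leaseCollectionName": "leases",  # same lease collection for both functions
        "leaseCollectionPrefix": lease_prefix,  # different prefix per function
        "createLeaseCollectionIfNotExists": True,
    }


# Two hypothetical functions that each receive every change to con-product.
notify_email = cosmos_trigger_binding("emailDocuments", "email")
notify_sms = cosmos_trigger_binding("smsDocuments", "sms")

print(json.dumps([notify_email, notify_sms], indent=2))
```

Because each function tracks its own leases under its own prefix, both read the full change feed independently.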

You need to configure an Apache Kafka instance to ingest data from an Azure Cosmos DB

Core (SQL) API account. The data from a container named telemetry must be added to a

Kafka topic named iot. The solution must store the data in a compact binary format.

Which three configuration items should you include in the solution? Each correct answer

presents part of the solution.

NOTE: Each correct selection is worth one point.

A. "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector"

B. "key.converter": "org.apache.kafka.connect.json.JsonConverter"

C. "key.converter": "io.confluent.connect.avro.AvroConverter"

D. "connect.cosmos.containers.topicmap": "iot#telemetry"

E. "connect.cosmos.containers.topicmap": "iot"

F. "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSinkConnector"

Answer:

C. "key.converter": "io.confluent.connect.avro.AvroConverter"

D. "connect.cosmos.containers.topicmap": "iot#telemetry"

F. "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSinkConnector"

Explanation:

C: Avro is a binary format, while JSON is text.

F: Kafka Connect for Azure Cosmos DB is a connector that reads data from and writes data to Azure

Cosmos DB. The Azure Cosmos DB sink connector allows you to export data from Apache

Kafka topics to an Azure Cosmos DB database. The connector polls data from Kafka to

write to containers in the database based on the topics subscription.

D: Create the Azure Cosmos DB sink connector in Kafka Connect. The following JSON

body defines config for the sink connector.

Extract:

"connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",

"key.converter": "org.apache.kafka.connect.json.AvroConverter"

"connect.cosmos.containers.topicmap": "hotels#kafka"

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/kafka-connector-sink

https://www.confluent.io/blog/kafka-connect-deep-dive-converters-serialization-explained/
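The topicmap values in the options and in the extract follow a topic#container pattern. A minimal sketch of how such a value breaks down, assuming multiple pairs are separated by commas; `parse_topicmap` is a hypothetical helper, not part of the connector.

```python
def parse_topicmap(value):
    """Parse 'topic#container[,topic#container...]' into a dict."""
    mapping = {}
    for pair in value.split(","):
        # Each pair names the Kafka topic first, then the Cosmos DB container.
        topic, container = pair.strip().split("#", 1)
        mapping[topic] = container
    return mapping


print(parse_topicmap("iot#telemetry"))
print(parse_topicmap("hotels#kafka"))
```

A value without the # separator, such as "iot" on its own, names a topic but no container, so this helper rejects it with a ValueError.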

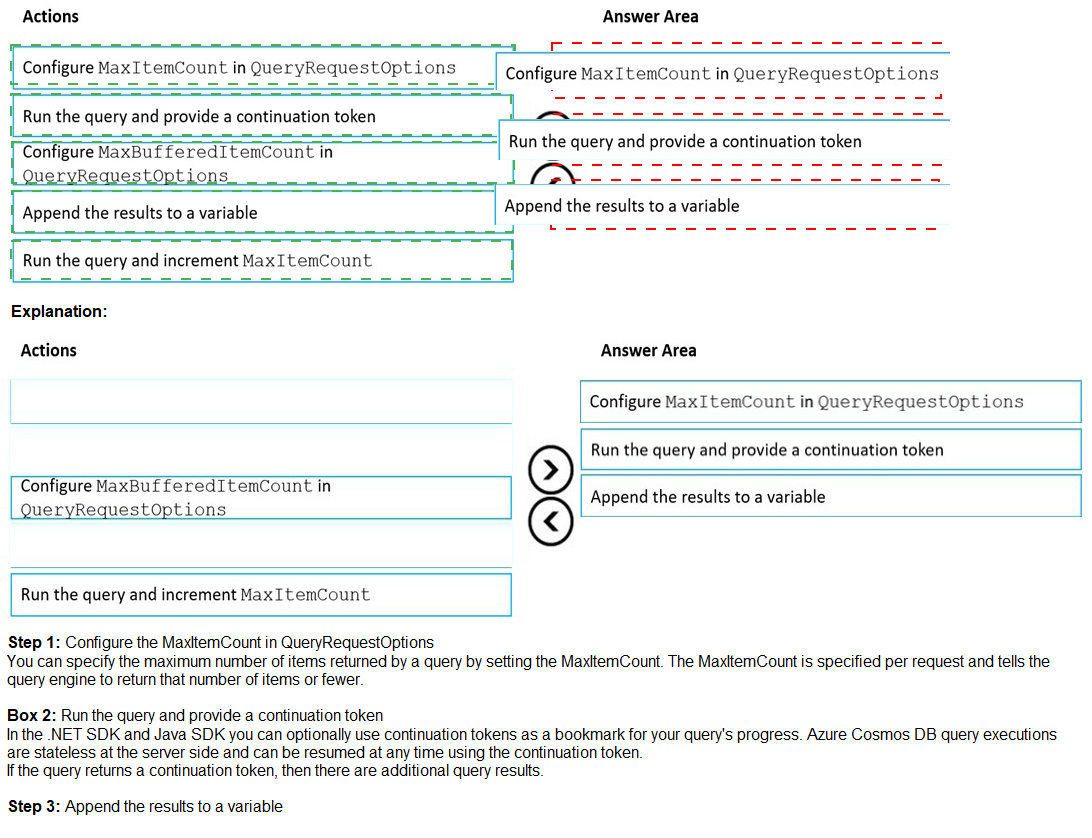

You have an Azure Cosmos DB Core (SQL) API account that is configured for multi-region

writes. The account contains a database that has two containers named container1 and

container2.

The following is a sample of a document in container1:

{

"customerId": 1234,

"firstName": "John",

"lastName": "Smith",

"policyYear": 2021

}

The following is a sample of a document in container2:

{

"gpsId": 1234,

"latitude": 38.8951,

"longitude": -77.0364

}

You need to configure conflict resolution to meet the following requirements:

For container1 you must resolve conflicts by using the highest value for policyYear.

For container2, you must resolve conflicts by accepting the location closest to latitude

40.730610 and longitude -73.935242.

Administrative effort must be minimized to implement the solution.

What should you configure for each container? To answer, drag the appropriate

configurations to the correct containers. Each configuration may be used once, more than

once, or not at all. You may need to drag the split bar between panes or scroll to view

content.

NOTE: Each correct selection is worth one point.
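The two resolution rules can be sketched in plain Python to make the difference concrete. This only illustrates the logic: the function names and the second sample documents are hypothetical, and in Azure Cosmos DB custom conflict resolution logic is written as a JavaScript stored procedure rather than in Python. The first rule compares a single numeric property, which is the shape of a last-writer-wins policy with a custom resolution path; the second needs a distance calculation.

```python
import math

TARGET = (40.730610, -73.935242)  # latitude, longitude from the requirement


def resolve_by_highest(path, a, b):
    """Keep the version with the larger numeric value at the given property."""
    return a if a[path] >= b[path] else b


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    h = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(h))


def resolve_by_closest(a, b, target=TARGET):
    """Keep the version whose coordinates are closest to the target point."""
    def distance(doc):
        return haversine_km(doc["latitude"], doc["longitude"], *target)
    return a if distance(a) <= distance(b) else b


# container1: conflicting versions of the same customer document.
v1 = {"customerId": 1234, "policyYear": 2021}
v2 = {"customerId": 1234, "policyYear": 2022}
print(resolve_by_highest("policyYear", v1, v2))

# container2: conflicting versions of the same GPS document.
g1 = {"gpsId": 1234, "latitude": 38.8951, "longitude": -77.0364}
g2 = {"gpsId": 1234, "latitude": 40.7000, "longitude": -74.0000}
print(resolve_by_closest(g1, g2))
```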

You have an application named App1 that reads the data in an Azure Cosmos DB Core

(SQL) API account. App1 runs the same read queries every minute. The default

consistency level for the account is set to eventual.

You discover that every query consumes request units (RUs) instead of using the cache.

You verify the IntegratedCacheItemHitRate metric and the

IntegratedCacheQueryHitRate metric. Both metrics have values of 0.

You verify that the dedicated gateway cluster is provisioned and used in the connection string.

You need to ensure that App1 uses the Azure Cosmos DB integrated cache.

What should you configure?

A. the indexing policy of the Azure Cosmos DB container

B. the consistency level of the requests from App1

C. the connectivity mode of the App1 CosmosClient

D. the default consistency level of the Azure Cosmos DB account

Answer: C. the connectivity mode of the App1 CosmosClient

Explanation: Because the integrated cache is specific to your Azure Cosmos DB account

and requires significant CPU and memory, it requires a dedicated gateway node. Connect

to Azure Cosmos DB using gateway mode.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/integrated-cache-faq
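The conditions in this scenario can be summarized as a small checklist. A minimal sketch, assuming that reads are served from the integrated cache only when the client targets the dedicated gateway endpoint, connects in gateway mode, and reads with session or eventual consistency. `integrated_cache_eligible` and the account name `myaccount` are hypothetical and not part of any SDK.

```python
DEDICATED_GATEWAY_SUFFIX = ".sqlx.cosmos.azure.com"
CACHEABLE_CONSISTENCY = {"session", "eventual"}


def integrated_cache_eligible(endpoint, connection_mode, consistency):
    """Return True when a read could be served by the integrated cache."""
    # Strip the scheme, path, and port to get the bare host name.
    host = endpoint.split("//", 1)[-1].split("/", 1)[0].split(":", 1)[0]
    return (
        host.endswith(DEDICATED_GATEWAY_SUFFIX)
        and connection_mode.lower() == "gateway"
        and consistency.lower() in CACHEABLE_CONSISTENCY
    )


endpoint = "https://myaccount.sqlx.cosmos.azure.com:443/"
print(integrated_cache_eligible(endpoint, "direct", "eventual"))
print(integrated_cache_eligible(endpoint, "gateway", "eventual"))
```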

You have the following query.

SELECT * FROM c

WHERE c.sensor = "TEMP1"

AND c.value < 22

AND c.timestamp >= 1619146031231

You need to recommend a composite index strategy that will minimize the request units

(RUs) consumed by the query.

What should you recommend?

A. a composite index for (sensor ASC, value ASC) and a composite index for (sensor ASC, timestamp ASC)

B. a composite index for (sensor ASC, value ASC, timestamp ASC) and a composite index for (sensor DESC, value DESC, timestamp DESC)

C. a composite index for (value ASC, sensor ASC) and a composite index for (timestamp ASC, sensor ASC)

D. a composite index for (sensor ASC, value ASC, timestamp ASC)

Answer: A. a composite index for (sensor ASC, value ASC) and a composite index for (sensor ASC, timestamp ASC)

Explanation:

If a query has a filter with two or more properties, adding a composite index will improve

performance.

Consider the following query:

SELECT * FROM c WHERE c.name = "Tim" AND c.age > 18

In the absence of a composite index on (name ASC, age ASC), this query uses a range

index. We can improve the efficiency of this query by creating a composite

index for name and age.

Queries with multiple equality filters and a maximum of one range filter (such as >, <, <=,

>=, !=) will utilize the composite index.

Reference: https://azure.microsoft.com/en-us/blog/three-ways-to-leverage-composite-indexes-in-azure-cosmos-db/
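Because a composite index serves at most one range filter, the query with range filters on both value and timestamp gets one composite index per range property, each led by the equality property sensor. A minimal sketch of that policy as a Python dictionary; the `composite` helper and the wildcard included path are assumptions.

```python
import json


def composite(*paths):
    """Build one ascending composite index from an ordered list of paths."""
    return [{"path": path, "order": "ascending"} for path in paths]


sensor_indexing_policy = {
    "indexingMode": "consistent",
    "includedPaths": [{"path": "/*"}],
    "compositeIndexes": [
        composite("/sensor", "/value"),      # equality on sensor, range on value
        composite("/sensor", "/timestamp"),  # equality on sensor, range on timestamp
    ],
}

print(json.dumps(sensor_indexing_policy, indent=2))
```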

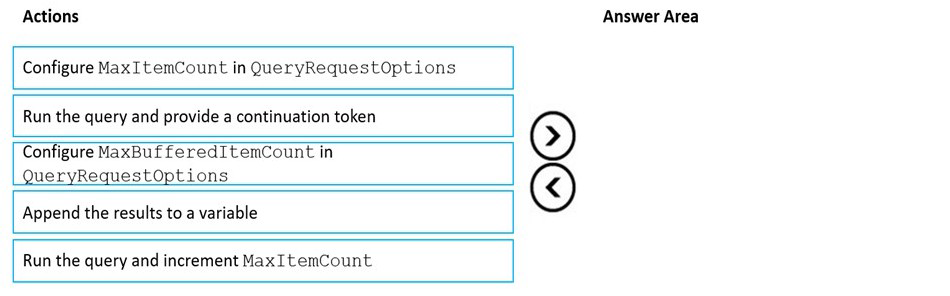

You have an app that stores data in an Azure Cosmos DB Core (SQL) API account. The

app performs queries that return large result sets.

You need to return a complete result set to the app by using pagination. Each page of

results must return 80 items.

Which three actions should you perform in sequence? To answer, move the appropriate

actions from the list of actions to the answer area and arrange them in the correct order.